Bohao Li

Ph.D. candidate, School of Data Science, The Chinese University of Hong Kong, Shenzhen

Shenzhen, China, 518000

Email: libohao1998@gmail.com; GitHub: https://github.com/Bohao-Lee; Google Scholar: https://scholar.google.com


Biography

I am a Ph.D. candidate in the School of Data Science, The Chinese University of Hong Kong, Shenzhen, advised by Prof. Benyou Wang. I received an M.E. degree from the University of Chinese Academy of Sciences, Beijing, in June 2023, advised by Prof. Qixiang Ye. I received a B.E. degree from Wuhan University, Wuhan, in June 2020.

My research interests include computer vision and deep learning, with a focus on few-shot learning and multimodal learning.

Academic Services

Journal Reviewer: IEEE TPAMI, IJCV, IEEE TNNLS, IEEE TCSVT, TMLR, etc.

Challenge Organizer: ICML 2024 Workshop on Multi-modal Foundation Model meets Embodied AI (MFM-EAI). [Link]

News

[2024.08] Frequency-Aware Divide-and-Conquer for Efficient Real Noise Removal is accepted by TNNLS.

[2024.06] Explicit Margin Equilibrium for Few-Shot Object Detection is accepted by TNNLS.

[2024.02] SEED-Bench-2: Benchmarking Multimodal Large Language Models is accepted by CVPR 2024.

[2023.10] Proposal distribution calibration for few-shot object detection is accepted by TNNLS.

[2023.02] Prompt, generate, then cache: Cascade of foundation models makes strong few-shot learners is accepted by CVPR 2023.

[2021.06] Part-based semantic transform for few-shot semantic segmentation is accepted by TNNLS.

[2021.02] Beyond Max-Margin: Class Margin Equilibrium for Few-shot Object Detection is accepted by CVPR 2021.

[2020.08] Prototype mixture models for few-shot semantic segmentation is accepted by ECCV 2020.

Publications


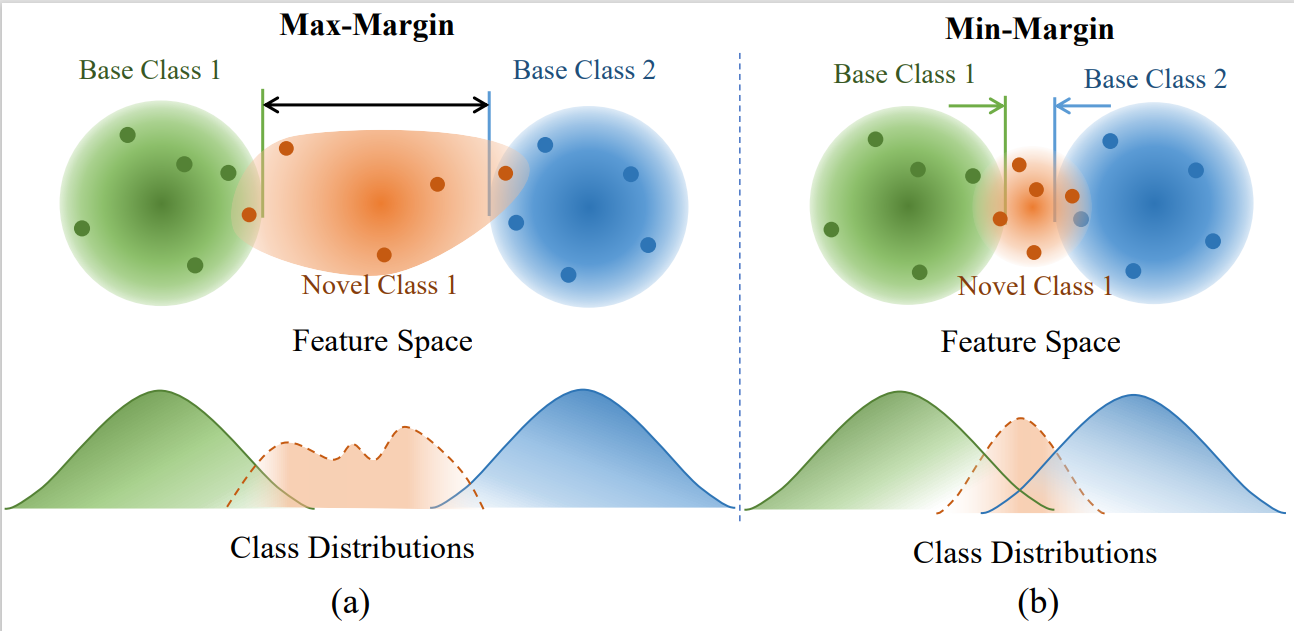

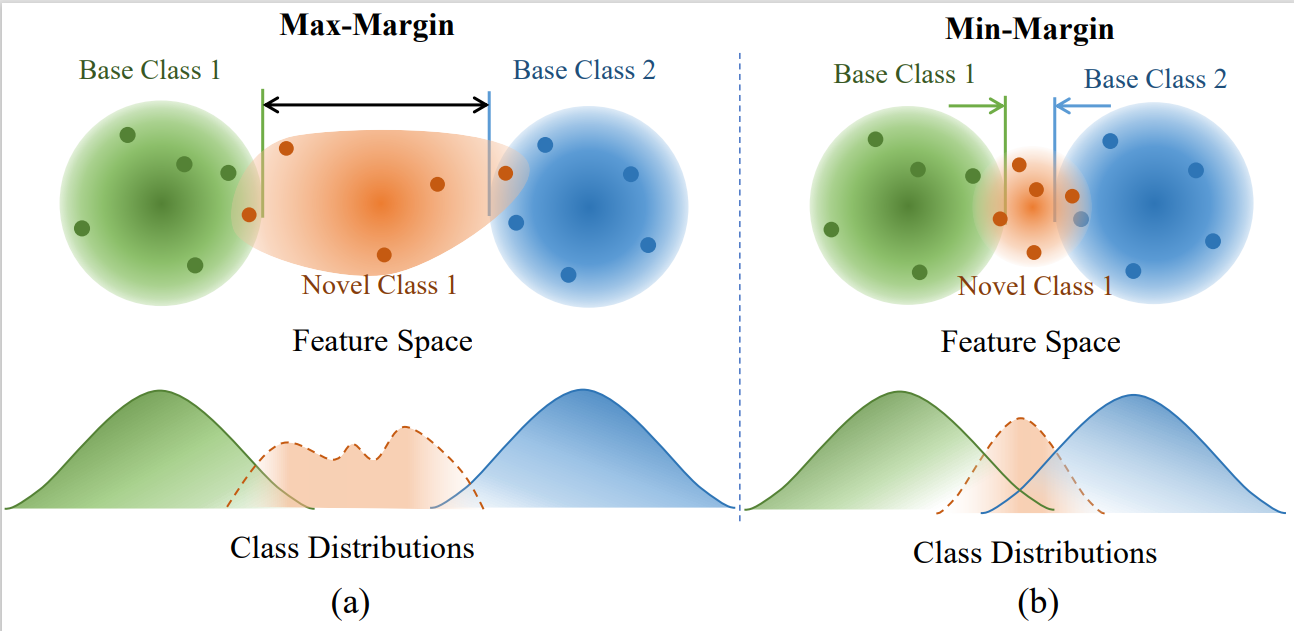

*Chang Liu, *Bohao Li, Mengnan Shi, Xiaozhong Chen, Qixiang Ye, Xiangyang Ji

Explicit Margin Equilibrium for Few-Shot Object Detection. IEEE Transactions on Neural Networks and Learning Systems, 2025. Journal version of "Beyond Max-Margin: Class Margin Equilibrium for Few-shot Object Detection". [Paper] [Code]


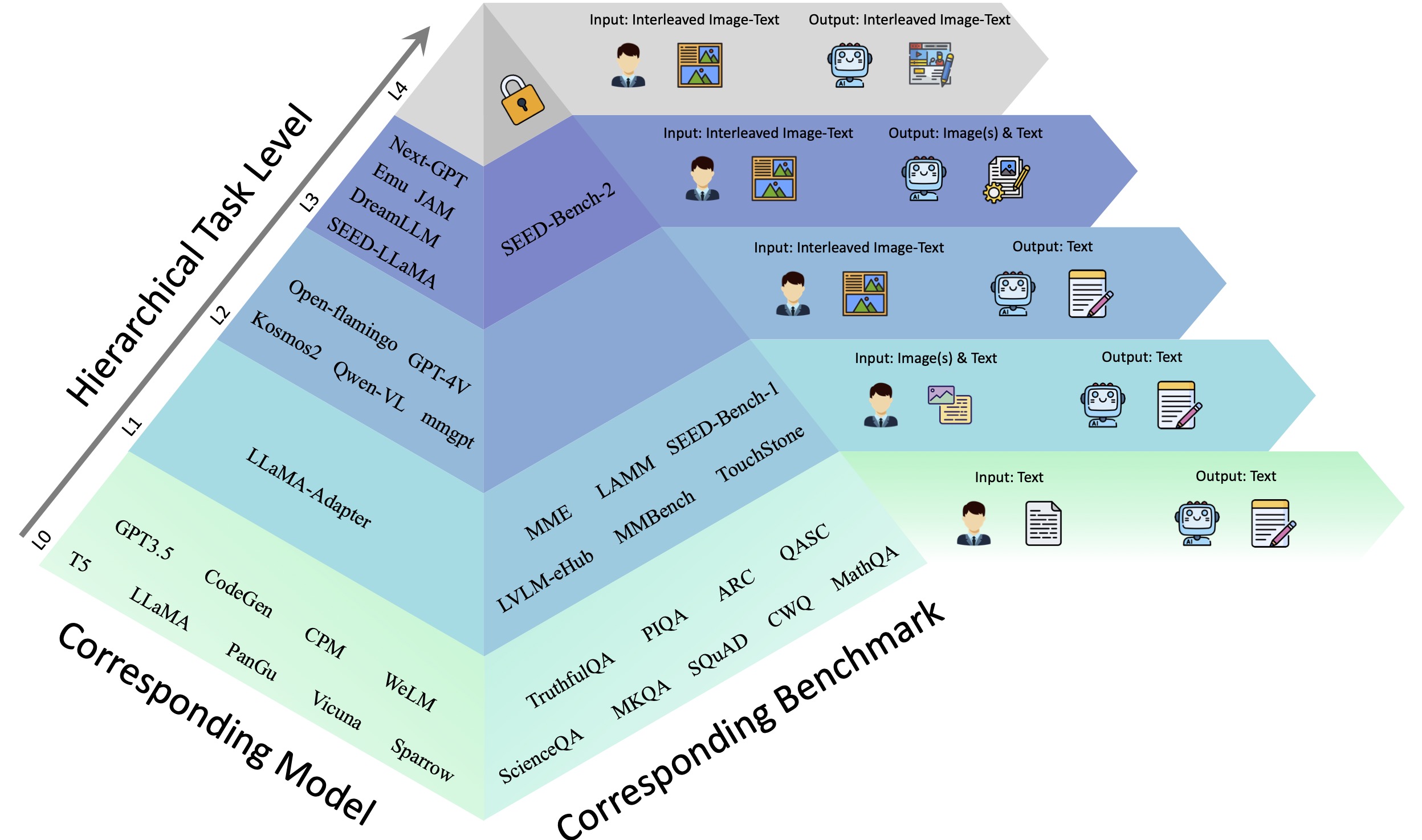

Bohao Li, Yuying Ge, Yixiao Ge, Ying Shan, Ruimao Zhang

SEED-Bench-H: Hierarchical Benchmarking Multimodal Large Language Models [Paper] [Dataset] [Code]


Bohao Li, Yuying Ge, Yi Chen, Yixiao Ge, Ruimao Zhang, Ying Shan

SEED-Bench-2-Plus: Benchmarking Multimodal Large Language Models with Text-Rich Visual Comprehension [Paper] [Dataset] [Code]


*Bohao Li, *Yuying Ge, Yixiao Ge, Guangzhi Wang, Rui Wang, Ruimao Zhang, Ying Shan

SEED-Bench-2: Benchmarking Multimodal Large Language Models. IEEE Conference on Computer Vision and Pattern Recognition, 2024. TechBeat Community's 2024 Popular Technology Work [Link]. [Paper] [Dataset] [Code] [Leaderboard]


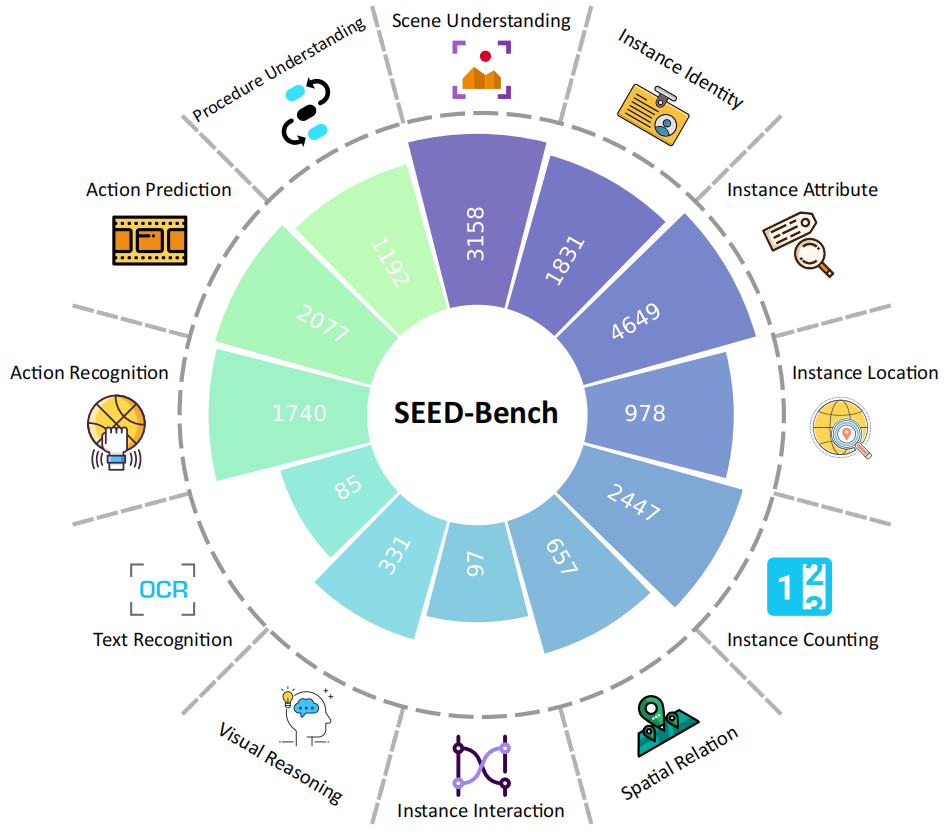

*Bohao Li, *Rui Wang, *Guangzhi Wang, Yuying Ge, Yixiao Ge, Ying Shan

SEED-Bench: Benchmarking Multimodal LLMs with Generative Comprehension [Paper] [Dataset] [Code] [Leaderboard]


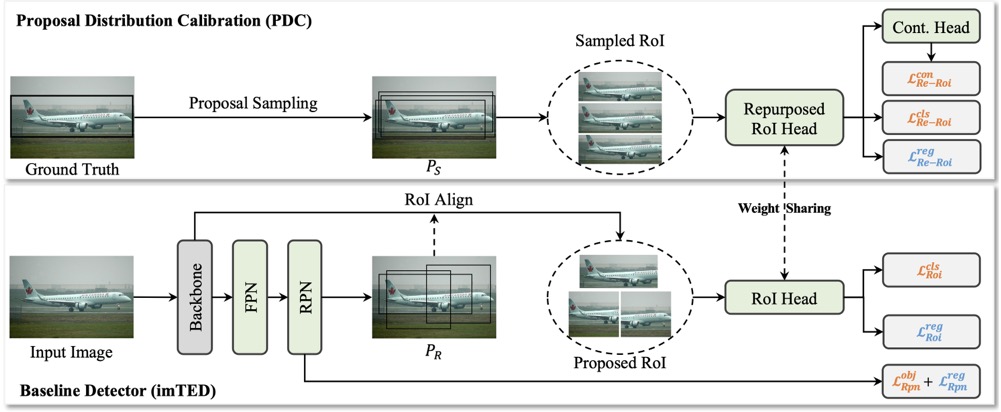

Bohao Li, Chang Liu, Mengnan Shi, Xiaozhong Chen, Xiangyang Ji, Qixiang Ye

Proposal Distribution Calibration for Few-Shot Object Detection. IEEE Transactions on Neural Networks and Learning Systems, 2024. [Paper] [Code]


Bohao Li, Boyu Yang, Chang Liu, Feng Liu, Rongrong Ji, Qixiang Ye

Beyond Max-Margin: Class Margin Equilibrium for Few-shot Object Detection. IEEE Conference on Computer Vision and Pattern Recognition, 2021. [Paper] [Code]


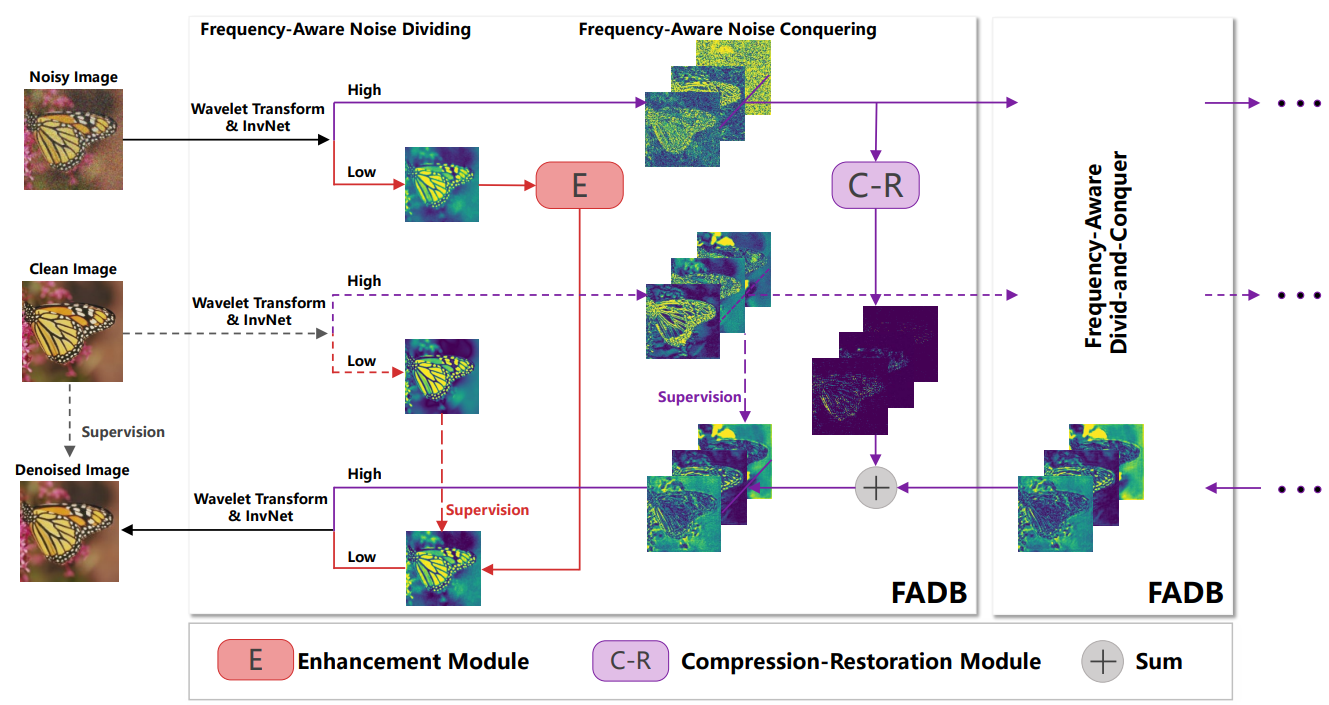

*Yunqi Huang, *Chang Liu, *Bohao Li, Hai Huang, Ronghui Zhang, Wei Ke, Xiaojun Jing

Frequency-Aware Divide-and-Conquer for Efficient Real Noise Removal. IEEE Transactions on Neural Networks and Learning Systems, 2025. [Paper]


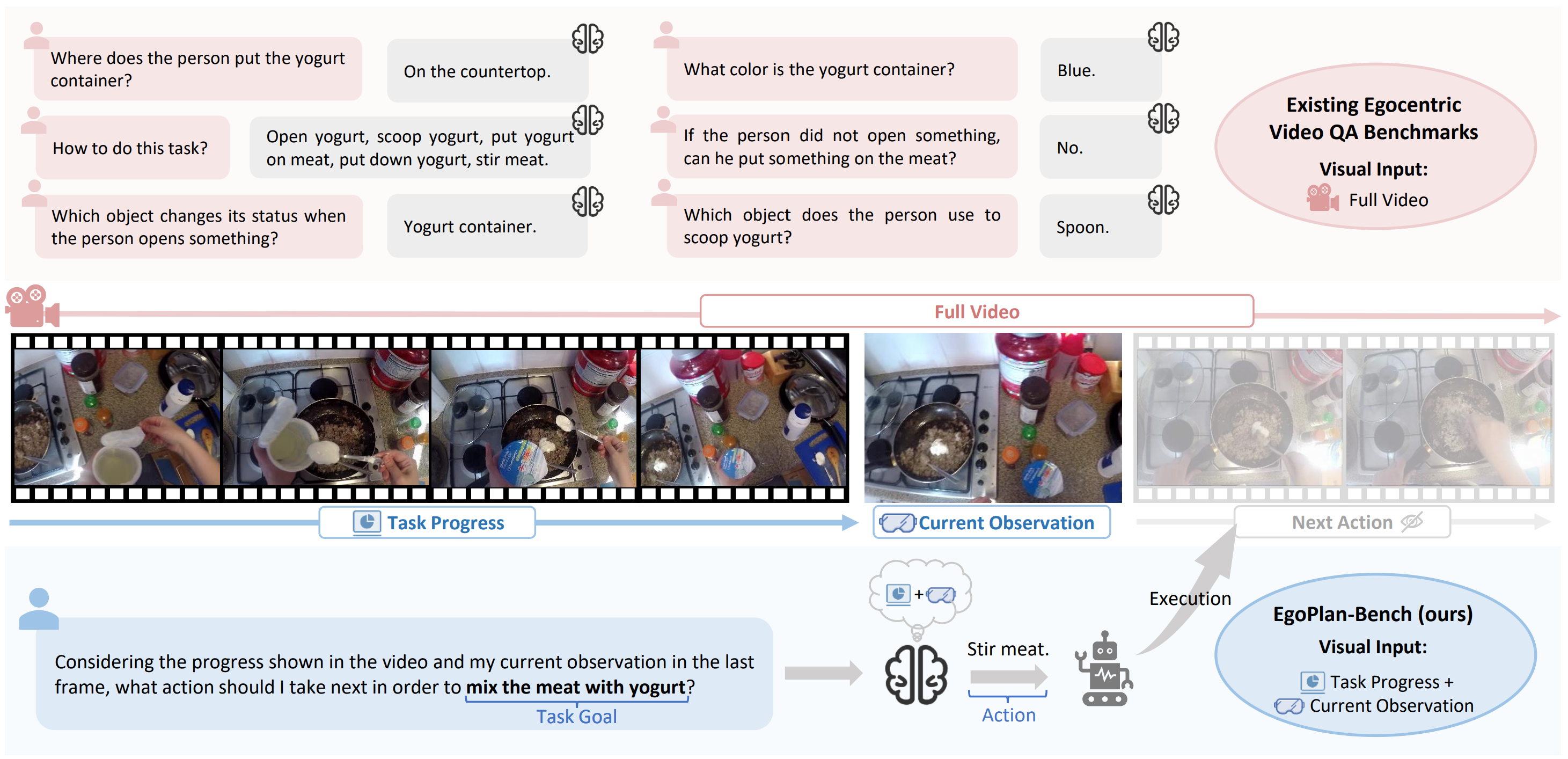

Yi Chen, Yuying Ge, Yixiao Ge, Mingyu Ding, Bohao Li, Rui Wang, Ruifeng Xu, Ying Shan, Xihui Liu

EgoPlan-Bench: Benchmarking Egocentric Embodied Planning with Multimodal Large Language Models [Project] [Paper] [Dataset] [Code]


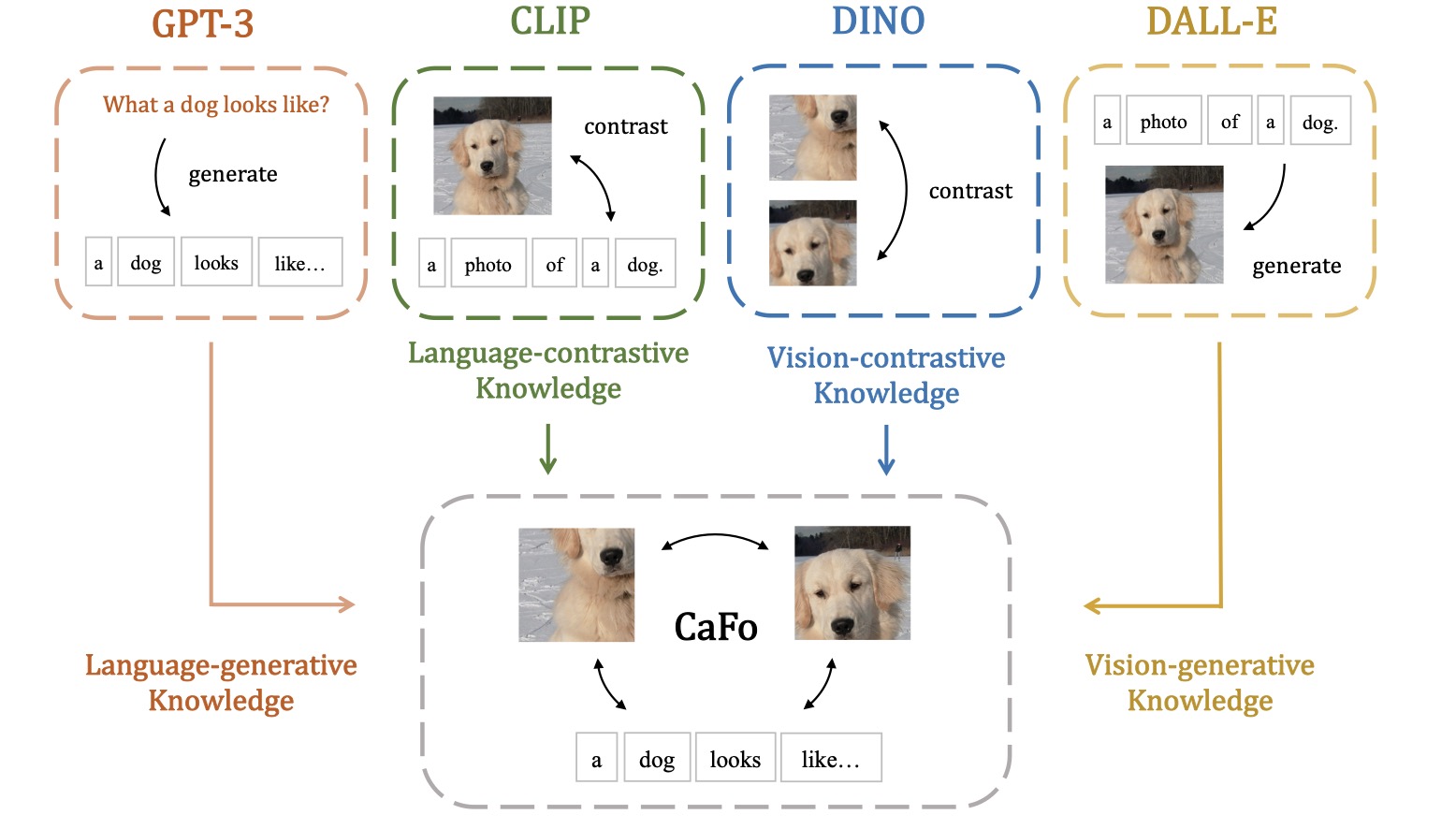

Renrui Zhang, Xiangfei Hu, Bohao Li, Siyuan Huang, Hanqiu Deng, Yu Qiao, Peng Gao, Hongsheng Li

Prompt, generate, then cache: Cascade of foundation models makes strong few-shot learners. IEEE Conference on Computer Vision and Pattern Recognition, 2023. [Paper] [Code]


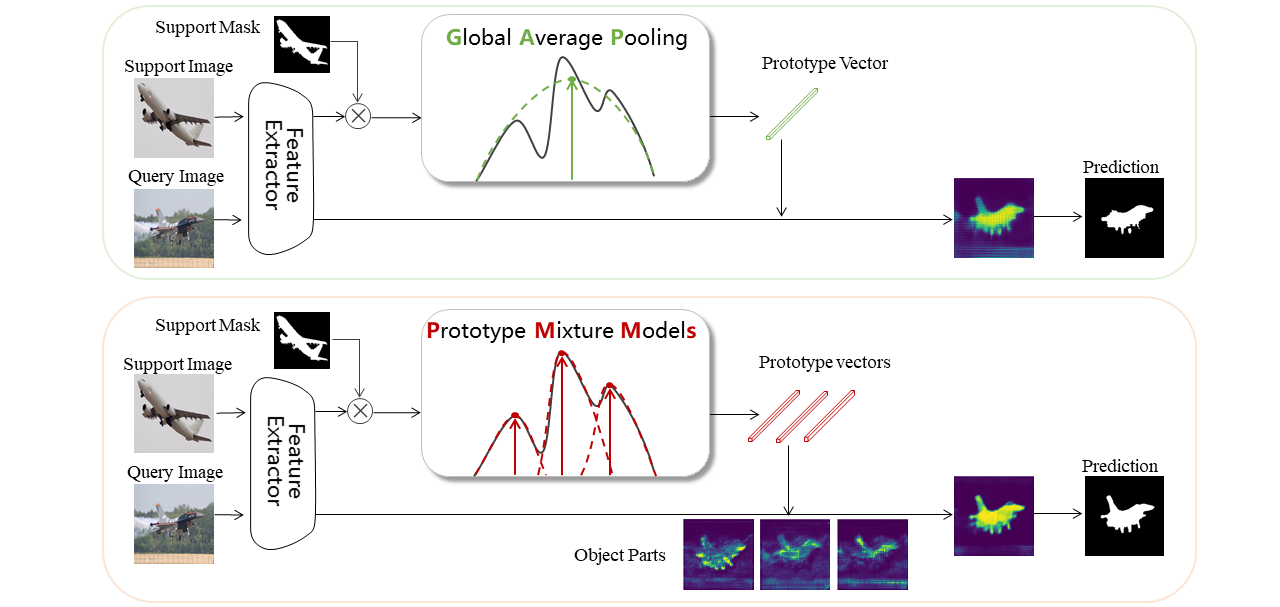

Boyu Yang, Chang Liu, Bohao Li, Jianbin Jiao, Qixiang Ye

Prototype mixture models for few-shot semantic segmentation. European Conference on Computer Vision (ECCV), 2020. [Paper] [Code]


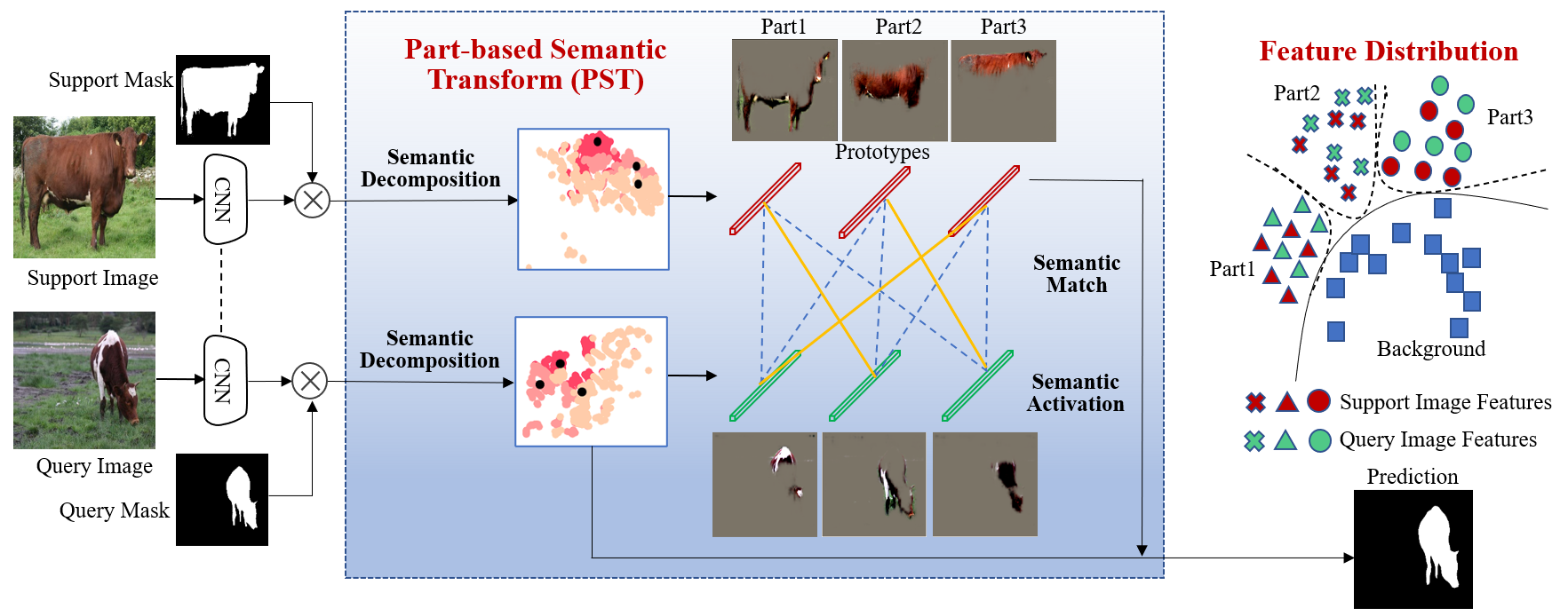

Boyu Yang, Fang Wan, Chang Liu, Bohao Li, Xiangyang Ji, Qixiang Ye

Part-based semantic transform for few-shot semantic segmentation. IEEE Transactions on Neural Networks and Learning Systems, 2021. [Paper] [Code]
